Over the past few decades, video cards have changed with every generation. The first generation did little more than output video. The second added simple 3D effects on top of video output. By the third generation, 3D acceleration had become the main focus of most video cards, and the fourth refined that 3D hardware. Later generations added programmable shading, with its own shading languages and supporting libraries, then stereoscopic 3D output, and then Shader Model 4.0.

Video cards have changed in many ways over the years, but one of the most visible differences between modern and early cards is the cooling. The first dedicated video cards ran cool enough to need little or no dedicated cooling, and their performance was adequate for most consumers. As the computing power of video cards increased, manufacturers added a fan, then a heatsink with fans, and eventually additional heatsinks for the card’s memory chips. The end result was a card that was more powerful on paper but harder to keep cool in the real world.

The evolution of video cards has been a long and grueling process, but we’ve finally arrived. There are more powerful GPUs on the market today than ever before, and the race is on for more powerful ones in the near future. This means it’s time to look back at how the industry has progressed, and what it will look like tomorrow.

Computer technology has changed dramatically over the past 30 years: components have become smaller and more powerful, and PCs have moved from displaying text strings to rendering photo-realistic scenes in real time. The graphics card is an important part of that hardware. Early cards were printed circuit boards about the size of an index card, carrying a number of chips. The card converts image data into signals and transmits them to the screen, and it is fast enough to handle games, movies and other multimedia. The appearance and design of graphics cards have also changed significantly as manufacturers improved PCB components and experimented with cooling solutions. While many companies have tried to enter the GPU market, Nvidia and AMD have come to dominate it.

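1940s: The beginning

The history of graphics hardware is often traced back to the 1940s, when the US military funded a computer-driven flight simulator project. That work later fed into the SAGE air defense system, whose operators worked at monochrome CRT displays, a long way from anything resembling a modern video card.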

1980s: The rise of the video card

1981

IBM introduced the Monochrome Display Adapter (MDA). It was one of the first display adapters, although it is not really considered a graphics card: its main feature was the display of text and symbol characters in 80 columns by 25 lines. In the same year, the CT5 flight simulator was built for the US Air Force at a cost of $20 million by Evans & Sutherland, the computer graphics company co-founded by Ivan Sutherland. The simulator, which ran on a DEC PDP-11 minicomputer and used three CRT monitors for realistic training, was state of the art.

1983

Intel released the iSBX 275 graphics board, a semi-revolutionary device that could display eight colors at a resolution of 256 x 256 pixels, a milestone in the graphics revolution.

1988

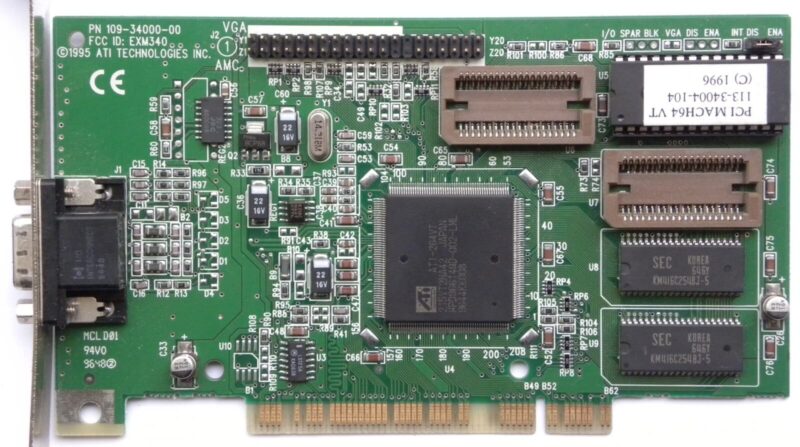

ATI, based in Canada, made a name for itself in the graphics industry with devices like the VGA Wonder. This 16-bit 2D card laid the groundwork for the company’s later graphics cards. The VGA Wonder also had a mouse port built into the board, typical of the inventiveness that defined ATI’s brand in the early days of the PC.

1990s: Periods of significant progress

1992

OpenGL 1.0, a platform-independent application programming interface (API) for 2D and 3D graphics, was introduced by Silicon Graphics Inc (SGI). OpenGL was originally aimed at professional UNIX workstation users. However, it won strong developer support and was quickly adopted for 3D games.

1996

The 3dfx Voodoo1 was one of the first consumer 3D accelerator cards, with a dedicated 3D processor, 4 MB of RAM and a core clock of 50 MHz. The Voodoo1 did not support 2D graphics at all, forcing users to pair it with a separate 2D card. But that didn’t matter, because the gaming community was waiting for hardware that could do justice to successful first-person shooters like Doom, Quake and Duke Nukem 3D.

1997

With the Riva 128, Nvidia made its real entry into the 3D graphics sector, competing with the Voodoo1. Its earlier product, the NV1 of 1995, had failed miserably. The NV1 was tied to a partnership with Sega, rendered quadratic surfaces instead of triangles, had mediocre integrated sound, and disappointed with its Sega controller ports. Nvidia quickly learned from its mistakes and moved on to the Riva 128, which dropped the quadratic rendering, the sound, and the Sega tie-in, but did not initially offer better performance. However, once Nvidia released updated drivers, the card climbed the benchmark charts. Its 3D performance didn’t quite match the Voodoo, but many found it good enough.

1998

Then came the Voodoo2 graphics card from 3dfx. This was an innovative card with three processing chips on board, and two cards could run in parallel in the same computer, which raised the maximum supported resolution to 1024 x 768. Together they delivered the best 3D graphics performance of the time. Unfortunately for 3dfx, this was its second and last truly amazing product.

1999

The future came in the form of Nvidia’s GeForce 256 (including a DDR version), which Nvidia marketed as the first GPU. The GeForce 256 could process at least 10 million polygons per second, supported DirectX 7, and shipped with an actively cooled heatsink.

21st century: Toward virtual reality

2002

ATI’s Radeon 9700 graphics card was built on a 150 nm process and supported Direct3D 9.0 and OpenGL 2.0.

2004

Nvidia introduced the GeForce 6 series, including the 6600 and 6800. The original 6800 was a popular card among overclockers, who could use an application such as RivaTuner to overclock the graphics card. If a settings change caused artifacts on screen, you could simply restore the default settings. The series also brought PureVideo video processing and SLI, which lets multiple graphics cards work together.

2006

Nvidia released the GeForce 8800 GTX, often cited as one of the best graphics cards in history. With 128 stream processors, a 575MHz core clock and 768MB of GDDR3 memory, this thing was a beast. Its texture fill rate was 36.8 billion texels per second. The GeForce 8800 GTX was a powerful graphics card for its time: it consumed a lot of power, but it could run the most demanding games for years.
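As a rough sanity check on spec-sheet numbers like these, a theoretical peak texture fill rate is commonly estimated as the core clock multiplied by the number of texture units. The sketch below is only illustrative: the 575 MHz clock comes from the text, while the count of 64 texture filtering units and the function name are assumptions added for the example.

```python
def texture_fill_rate_gtexels(core_clock_mhz: float, texture_units: int) -> float:
    """Theoretical peak fill rate, in billions of texels per second.

    Each texture unit is assumed to produce one texel per core clock cycle.
    """
    return core_clock_mhz * texture_units / 1000.0


# 575 MHz is the core clock quoted in the text.
# 64 texture filtering units is an assumption, not stated in the article.
rate = texture_fill_rate_gtexels(575, 64)
print(f"{rate:.1f} billion texels per second")
```

Real-world throughput is lower than this peak, since it ignores memory bandwidth and shader bottlenecks.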

2009

Before AMD retired the ATI brand, there was the Radeon HD 5970, one of the last products to carry the ATI name. The dual-GPU card has been called a monument to 3D excess, and even a monster by some. It was also notably heavy for a graphics card. It offered a dual-GPU design with unmatched performance and was ATI’s flagship graphics card. It was so well built and so formidable that it is still available today.

2012

2012 marked a new round in a rivalry that continues to this day. With the Radeon HD 7970, AMD created a powerful card based on its Graphics Core Next (GCN 1.0) architecture and manufactured on a 28nm process. Nvidia answered with GPUs built on its Kepler architecture, also on a 28nm process, and Kepler went on to serve for two generations.

2013

The AMD Radeon R9 290 arrived, a powerful high-end GPU that long held its ground in new games. Nvidia’s GeForce GTX Titan borrowed its architecture from supercomputers. It was a colossus of ingenuity and design, with seven billion transistors, six gigabytes of RAM, a vapor-chamber cooler, and supercomputer-class design in a surprisingly small package.

2014

Nvidia released the GeForce GTX 750 Ti, sold by board partners such as EVGA. It outperformed its predecessors while drawing little power, so you could enjoy games even at 1080p. It has 2GB of GDDR5 memory running at an effective 5400MHz (quad-pumped). Nvidia’s Maxwell-based GeForce GTX 970 was also a big hit with gamers as a higher-priced 1080p option.
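The 5400MHz figure and the "quad pump" remark are related: GDDR5 moves four data transfers per pin for each cycle of its base memory clock, so the quoted number is an effective rate. A minimal sketch of that arithmetic follows, assuming a 1350 MHz base clock and a 128-bit memory bus; neither figure is stated above, and the function names are made up for illustration.

```python
def effective_rate_mtps(base_clock_mhz: float, transfers_per_clock: int = 4) -> float:
    """Effective data rate in mega-transfers per second (quad-pumped by default)."""
    return base_clock_mhz * transfers_per_clock


def bandwidth_gb_per_s(effective_mtps: float, bus_width_bits: int) -> float:
    """Peak memory bandwidth in GB/s: transfers per second times bytes per transfer."""
    return effective_mtps * bus_width_bits / 8 / 1000.0


# Assumed values (not from the article): 1350 MHz base clock, 128-bit bus.
rate = effective_rate_mtps(1350)
print(f"effective rate: {rate:.0f} MT/s")
print(f"peak bandwidth: {bandwidth_gb_per_s(rate, 128):.1f} GB/s")
```

The same two-step calculation works for other memory types by changing `transfers_per_clock`, for example 2 for plain DDR memory.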

2016

Nvidia announced the GeForce GTX 1060, a mid-range graphics card available with 3 GB or 6 GB of memory. Its cooling leaves room for significant overclocking, and the GTX 1060 is the lowest-tier Nvidia card of its generation rated for VR. It is probably still one of Nvidia’s most popular graphics cards. Nvidia also released the GeForce GTX 1080 in 2016. The Pascal architecture behind the GeForce GTX 10 series is manufactured on a 16nm process. The flagship GTX 1080 features 8GB of GDDR5X VRAM and, as a single card, can compete with SLI or CrossFire setups for 4K gaming.

The Polaris-based AMD Radeon RX 480 is built on a 14nm process and aimed at mid-range gamers who need high performance. Besides gaming, graphics cards these days are also put to work in design programs and in video editing software such as Movavi.

When the first video cards came out in the early 1980s, their only job was to display video. For a long time that primary purpose dominated and any secondary use mattered less and less, but the last few generations have taken secondary uses of the card seriously again.

Frequently Asked Questions

What was the first graphics card?

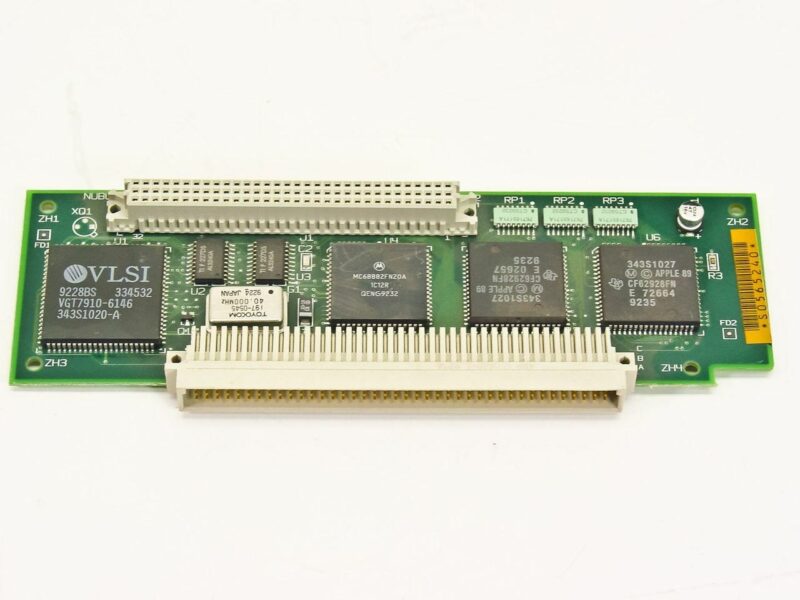

In the early days of PC gaming, games had to be designed around the hardware available at the time. Processors were slow, and graphics were far from optimal. As time went by, video cards steadily improved and eventually surpassed the limits of their predecessors. The birth of the graphics card is an important milestone in the history of the personal computer. Graphics hardware was conceived before the first personal computer, but the first display adapter for the PC is generally taken to be IBM’s Monochrome Display Adapter of 1981.

Who invented the video card?

When it comes to who invented the video card, the history is a bit hazy. Was it AMD who started the whole thing, or was it Nvidia? Neither: as the timeline above shows, IBM’s display adapters and early boards from companies like Intel and ATI came first. And what about chips like the S3 ViRGE, did they get consumer 3D going? Some of this history has been documented, but there are still a lot of questions about the early days of the graphics card industry and how it all began.

What is clear is that one of the most noticeable trends was the introduction of 3D rendering. Early 3D scenes were basically just a bunch of lines and triangles with flat color filling in the gaps. Later hardware learned to overlap and blend those triangles, and to build them into convincing spheres, cubes and more complex shapes. People were amazed by how far the technology had come, and by advanced 3D effects that were previously impossible.

When was the first video card made?

Going by the timeline above, the first display adapter for the PC arrived in 1981, although dedicated graphics hardware for simulators existed earlier. Looking at the years in which new display chips appeared, you’ll see a steady progression from the early ’80s onward.